OpenAI, Sora, and what generative video AI models might mean for all of us

I don't know anyone who thinks the world is ready for convincing AI-generated video.

On Thursday, Sam Altman, the CEO of OpenAI, announced that the company has a new product called Sora that can generate up to 60 seconds of photorealistic video from a text prompt. The company released a series of videos it says were created with Sora, and they are impressive.

Until now, most people have interacted with generative AI through experiences like ChatGPT or Google's Gemini, which allow users to enter text into a prompt and get back a text response. Or perhaps you've used a tool like Dall-E or Midjourney to create images.

This feels different, not just because of the technical aspects of pulling off convincing video, but also because of what it means for all of us. It doesn’t seem like it will be long before we’re asking ourselves whether this is a tool we should have unleashed.

Video is--for obvious reasons--a much harder problem to solve. Still, what OpenAI has shown of Sora so far is pretty wild. The video above was created from the prompt "Beautiful, snowy Tokyo city is bustling. The camera moves through the bustling city street, following several people enjoying the beautiful snowy weather and shopping at nearby stalls. Gorgeous sakura petals are flying through the wind along with snowflakes."

In addition to the videos the company included in its announcement, Altman was taking prompts on X and sharing the results all afternoon. The idea that you can type words into a browser window and a computer will give you back a 60-second photorealistic video is mind-blowing.

But--and this is an important point--these are highly controlled demos. No one outside of OpenAI is able to type prompts in to test its abilities. Journalists who were briefed on the demos were not able to try it for themselves, probably because Sora isn't yet at a point where it consistently produces good results. Even Altman's examples from user prompts were curated.

There are really two very different things here, and both are worth considering. The first is that this is an entirely new front for AI. The fact that Sora can produce these results--even occasionally--is both compelling and concerning. OpenAI says it is allowing safety researchers to use Sora in order to create boundaries and limits--presumably because we're about to enter what is surely going to be the most contentious presidential election season ever, and Altman does not want to be sitting before Congress explaining why it isn't OpenAI's fault that its product was used to influence the outcome.

The other is that I have a lot of questions. How long do these videos take to generate? Hours? How consistent is Sora at producing the kind of results the company is showing off as demos? And, maybe more importantly, how will OpenAI prevent them from being used for the inevitable: misinformation and abuse?

I guess there's another obvious question: What is OpenAI using to train Sora? In a statement to Wired, Bill Peebles, who co-led the project, said, "The training data is from content we've licensed and also publicly available content." He did not say what "publicly available content" means, or whether that includes copyrighted material that happens to be on the internet.

While most people will, rightfully, be focused on the questions of copyright infringement and misinformation, I actually think the second question is more interesting--at least from the perspective of the promise OpenAI is making. Generative AI is notoriously bad at getting the details right. That's true if you're typing things into ChatGPT or Google's Gemini, both of which will just make things up with no relationship to reality. It's also true for photos--and now videos.

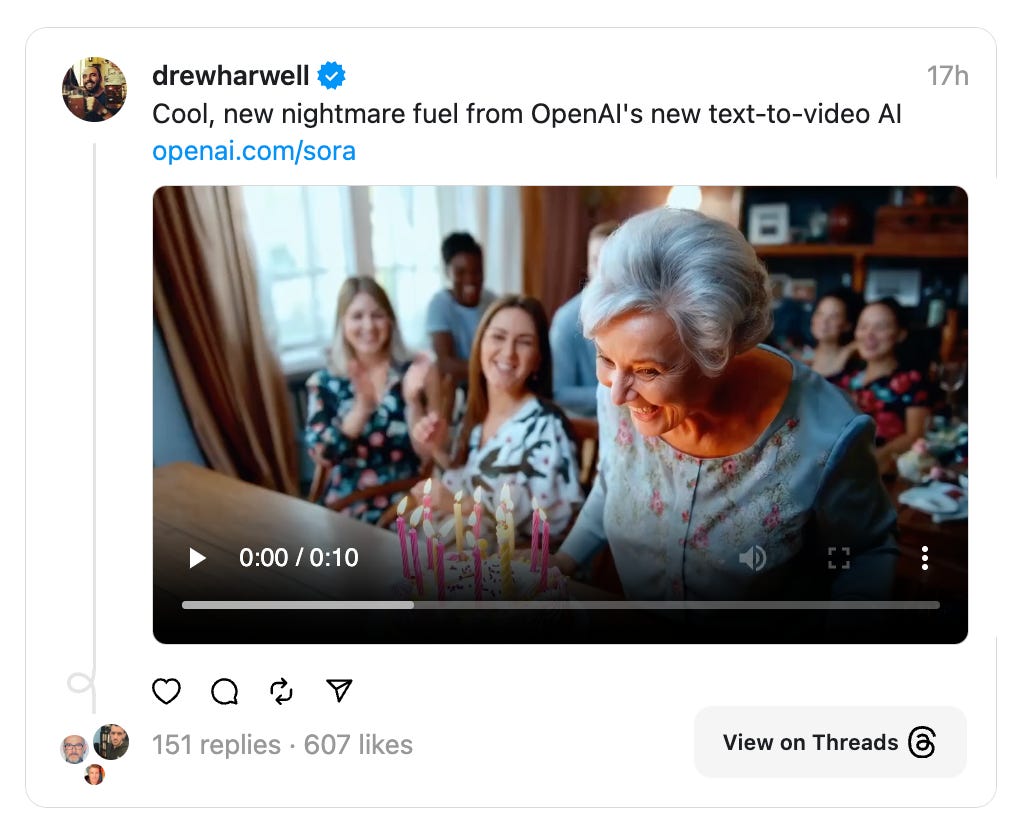

One of the examples shown was of an older woman and a cake, presumably for her birthday. Around her are other people clapping. Except, one of the women in the video isn't actually clapping, and her hands move in ways that could only be possible if she has no bones in her fingers. You don't notice it at first, but once you do, you cannot unsee it.

I'm not criticizing Sora for not being perfect. It isn't even available as a product yet, and when you think about what it's doing, the results so far really are incredible. I do think, however, that OpenAI is creating an expectation, and it'll be very interesting to see whether it can live up to the promise it is making.

That's just a thing that happens with almost every new product. The company that makes it will roll out very controlled demos to show off its best capabilities. That just makes sense, but it risks a letdown if the finished product doesn't live up to those expectations every time.

Apple's Vision Pro headset is a great example. I was one of the people who had a chance to use it back in June at Apple's developer conference, WWDC, and I was blown away by how good it was. That impression came after a 30-minute demo, however. I only saw what Apple wanted me to see.

Now that the product is available to anyone, people are poking at its limits and finding out where it doesn't live up to the expectations. Sure, it's still a pretty incredible piece of technology, but the story has gone from how mind-blowing it is to how people are returning it en masse because they can't see themselves using it.

Ultimately, the lesson here is simple: be careful with the expectations you set. It's not surprising that OpenAI wants to show off how impressive Sora is, but once it's in the hands of 100 million users, their experience is going to be wildly different. If that experience doesn't live up to the promise, that's a lot of disappointed users.

This first appeared in my Inc.com column.

Primary Technology Episode 008

Be sure to check out the latest episode of Primary Technology, the show Stephen Robles and I do each week, where we talk about the tech stories that matter.

You can also listen wherever you get podcasts:

Apple Podcasts / Spotify / PocketCasts / Overcast / primarytech.fm

Mark Zuckerberg Responds to Vision Pro

There are two things you can learn from Mark Zuckerberg's video review of the Vision Pro that he shared on Instagram. The first is that Zuckerberg seems a little worried that people might think Vision Pro is a better version of Meta's Quest headset. Why else does he bother making this video highlighting the contrast between the two devices and proclaiming the Quest is the better product?

The second and more interesting thing is that Zuckerberg is right. At least, he's right about one important assertion he makes at the beginning.

"I have to say that, before this, I expected that Quest would be the better value for most people since it's really good and it's like seven times less expensive," Zuckerberg begins. "After using it, I don't just think that Quest is the better value; I think Quest is the better product, period."

That, to be fair, is the type of thing you'd expect to hear from a competitor talking about their own product. But Zuckerberg goes on to explain why, and this is where I don't think there's any question he is right:

"Quest is better for the vast majority of things that people use mixed reality for," Zuckerberg says.

Look, that's obviously true because most of the people who use mixed reality are using a Quest 2 or a Quest 3. They are, without question, the most popular consumer headsets you can buy. They may not be the highest quality, but they are fine for what people use them for, and Meta has established itself as the biggest platform.

The things people use mixed-reality or virtual-reality (VR) headsets for are naturally going to be limited by the fact that you have to wear them on your head, and most people aren't going to do that for anything but playing games or maybe watching a movie. Headsets are not going to replace our laptops or smartphones anytime soon because those devices are just better at what they do. They're also less awkward to use.

Smartphones (for the most part) are still a thing that fits in your pocket. Smartwatches became mainstream because they are a thing you wear on your wrist, which is a place people are already used to wearing something. Headsets are a computer you wear on your face, which is just not a thing most people are going to want to do, meaning that the market for them is always going to be smaller.

The obvious exception to that is if someone eventually figures out how to miniaturize the technology enough that it fits into a pair of reasonable-looking glasses that provide a sort of heads-up display with information that augments the real world you're looking at. That, however, is just not something we're going to see anytime soon.

Of course, Zuckerberg also couldn't help but take a few not-so-subtle digs at Vision Pro's biggest weaknesses. "There are no wires that get in the way when you move around," Zuckerberg said about the Quest 3. "It's a big deal."

But those criticisms aside, the core problem of the Apple Vision Pro isn't that it has an external battery, but that it's a very expensive product for something most people don't know what to do with. It is, by all accounts, the very best version of this type of product that has ever been made, at least in terms of technology. But that alone doesn't make it useful. That's a tough sell for something that costs $3,500.

The Apple Watch didn't do much well when it first came out besides tell time. The software was bad, and Apple hadn't yet figured out that its strength was as a health device. It was also only $350, and, well, it did tell time. It was expensive for a digital watch, but it wasn't prohibitively expensive. It wasn't a terrible expense if you weren't sure what to do with it.

The Vision Pro is different. If you don't already have a plan for something, $3,500 is a lot of money to spend. Also, if you're considering buying a Vision Pro, there is a good chance you already have an iPhone and a Mac, both of which you know what to do with, and both of which do a lot of things better. If you just want a nice headset for the types of things people use headsets for, Zuckerberg is probably right: the Quest is a better product.

But I don't think Apple thinks of it as a device people buy to do typical VR things like play Beat Saber. In fact, playing games on Vision Pro would be a nightmare for the most part. There isn't even a great input interface for playing games. You can pair it to a Bluetooth controller, but the reason people like playing games on the Quest 3 is that it includes hand controllers and the games are designed around that input device.

I think Apple means it when it talks about Vision Pro as a spatial computing device. The thing is, that sounds very cool, but it's not a thing people do. At least, not yet. And getting people to buy a device to do something they don't already do is hard when that device is $3,500. Apparently, if reports that buyers are returning their Vision Pros are true, getting them to keep them is also a real challenge. That's not great news for Apple.

Other stories you might like:

Lyft’s CEO apologizes for $2B oops. (Business Insider)

Meta and Apple are trading words over the App Store… again. (TechCrunch)

Apple released a paper on its own text-to-animation AI model. (The Verge)

Apple is also removing Home Screen apps in the EU. (9to5Mac)

Microsoft is bringing Xbox games to PlayStation and Switch. (Daring Fireball)